What Actually Works in AI: And Why No One Talks About It

The EU Parliament just published another AI strategy document. €200 billion for "gigafactories." Race to catch America. Same story, different quarter. Brussels seems to like chasing us.

Meanwhile, something interesting is happening on the ground that Brussels seems to miss entirely. Perhaps we are missing it too, and we can’t afford to!

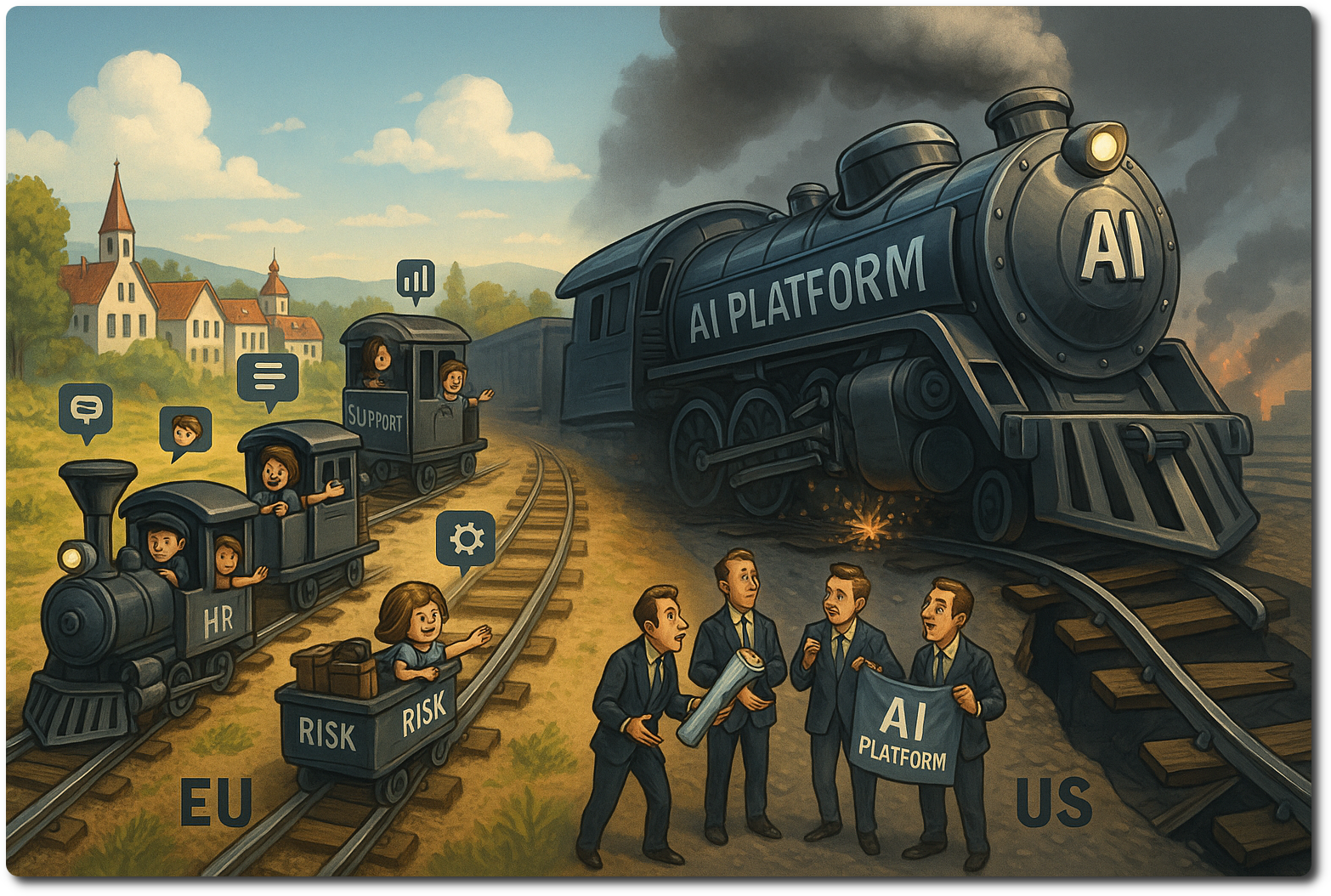

Two playbooks. One works much better.

In America, AI adoption means: pick a hyperscaler (AWS, GCP, Azure), consume hosted models, pay per token, lock yourself in. Big budgets. Big vendors. Big dependencies. The enterprise default.

This infrastructure is so abundant that I recommend learning on it FOR FREE.

In Europe — when companies actually commit rather than simply announce to get counted — I see something very different: open-source infrastructure, locally-hosted models, tiny teams (3-5 specialists), fine-tuned to actual domain needs. No dependency. No lock-in. Lower cost. Higher success rate.

The American approach makes great press releases.

The European approach just ships working product.

I’ve seen this pattern before.

In 2023, I watched Ukrainian logistics networks solve an impossible problem: keep a country fighting with fractured supply chains. Their solution wasn’t centralized control. It was distributed commitment — thousands of small makers, validation only at transaction commit, late onboarding welcome.

By 2025, that pattern migrated south. Rheinmetall’s supply chain looks nothing like it did in 2022. Instead of a few large sources, they now coordinate with 1,250+ small shops. Monsters like Rheinmetall, KMDB, and BAE operate as something like a single organism with thousands of small manufacturers, servicing equipment nobody designed and building systems nobody envisioned: Frankensteined weapon platforms, from bulldozers to technicals.

This isn’t chaos. It’s architectural resilience. Small, distributed, domain-expert nodes. Sound familiar?

The world’s leading economic research institutions are already writing books about it. China is paying close attention. But in the USA, we’re oblivious to this development.

Europe is changing fast. We’re not.

The AI parallel is exact.

The companies I see succeeding with AI in Europe aren’t waiting for gigafactories. They’re:

- Running Mistral or Llama variants on their own infrastructure;
- Fine-tuning small models for specific domain problems;
- Deploying with teams of 3-5 people who understand both the tech and the business;
- Iterating weekly, not quarterly;
- Spending 10% of what American competitors spend, getting better and cheaper results.

No vendor lock-in. No token bills scaling to infinity. No "our AI strategy depends on OpenAI’s roadmap." No chasing the latest model — chasing profit instead.

Why doesn’t anyone talk about this?

Because it’s boring. "German manufacturer deploys fine-tuned 7B model with 11-person team" doesn’t make headlines. "EU announces €200B AI gigafactory initiative" does.

But one of these approaches actually works at enterprise scale. The other creates great slide decks. One of them survives an AI bubble crash. The other does not.

Disclosure: My Lens

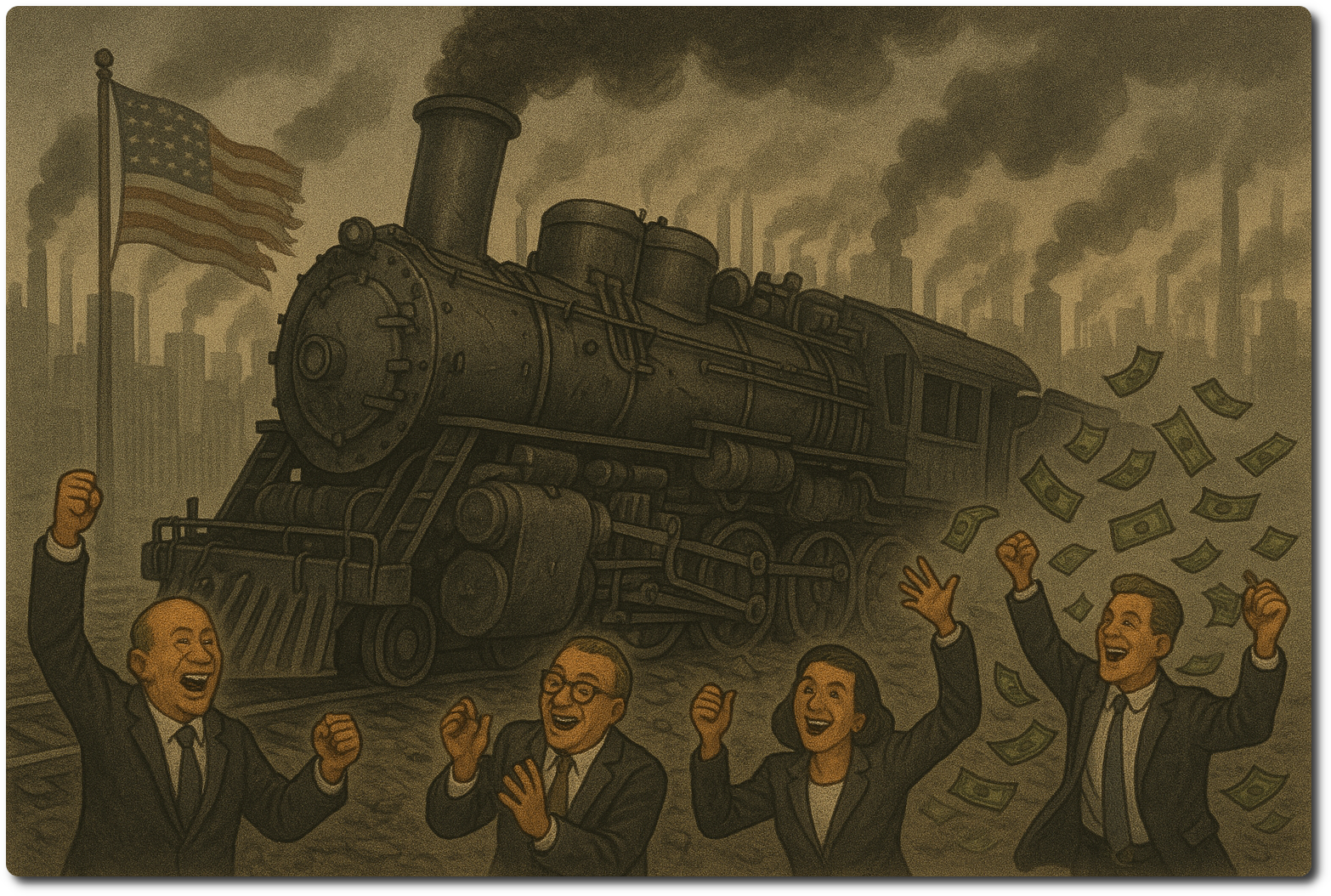

Scale-then-profit usually works. For American AI adoption — I’m not seeing it yet.

My US numbers come from Open Intelligence reports. European numbers I get firsthand — I’ve reviewed 100+ EU implementations. Almost all generate revenue today. Not "spend now to save later."

The pattern difference: EU projects start with a business problem where AI fits naturally. US projects start with "where else can we stick AI and trumpet about it?"

I’m a small consultancy. In America, big is the game — I struggle to sell. In Europe, I sell on competence and reputation. That’s my bias. Take it as you will.

But the results speak: one approach ships profit. The other ships press releases.

The uncomfortable question for executives:

Are you building AI capability? Or are you buying AI dependency? Are you chasing AI bubbles? Or are you solving business problems?

The distributed, domain-specific, small-team approach isn’t just European pragmatism. It’s sound architecture. It’s what actually survives contact with production. And it used to be an American Thing!

The distributed way has only one dependency: competence!

I’ve seen both playbooks. I’ve built with both. One creates long-term capability. The other creates long-term invoices.

Your choice.

For All:

Hands down, the best explanation of LLM evolution is by JetBrains: Why LLMs Are Finally Useful.

I’m excited to share it with you here.

For Developers:

Several key changes have just taken place. Some of them boost the American way of doing AI, and some really boost the competence-driven approach Europeans favor. I will link the best ones here:

The USA-specific boosters:

- Gemini 3 is part of GCP now: YouTube @Fireship: Did Google just kill OpenAI?;
- Amazon Bedrock adds 18 fully managed open weight models… (a match for the self-hosted folks).

The EU-specific boosters:

Note: Requirements for Qwen3-30B-A3B-Thinking-2507 are overstated — try it before you panic.

Fun stuff:

JetBrains Advent of Code 2025 is 3 days in!
